Spectral Motion Alignment significantly facilitates the capture of long-range and complex motion patterns within videos.

| Input | Generated video | Generated video |
|---|---|---|
| Input Video of Motion M1 | Shark + M1 + Under Water | Spaceship + M1 + In Space |
| Input Video of Motion M2 | Bear + M2 | Astronaut + M2 + On Snow |

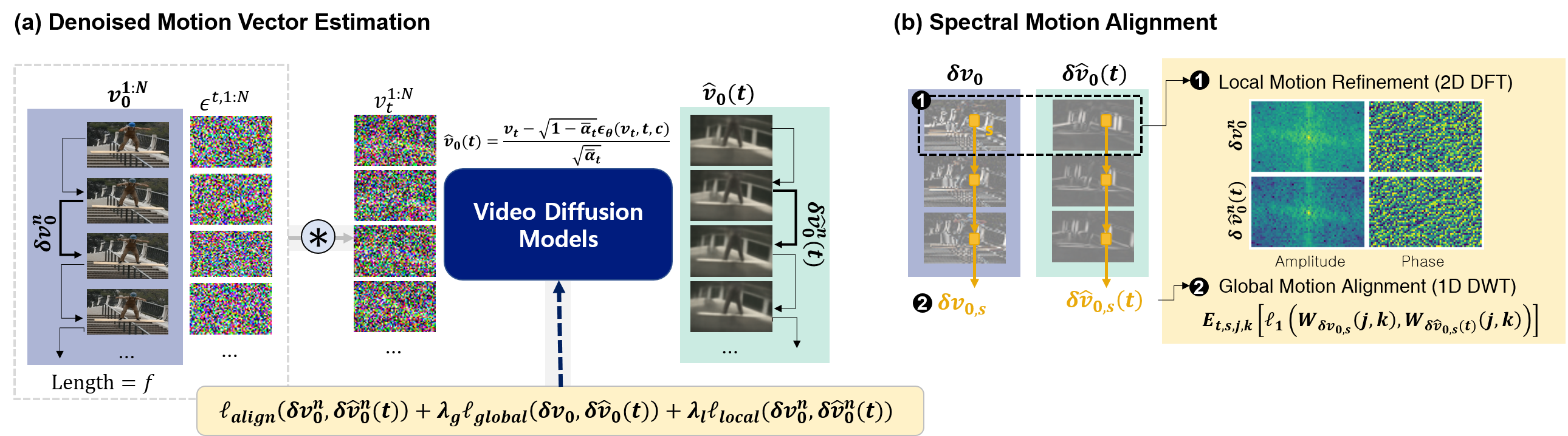

Abstract

The evolution of diffusion models has greatly impacted video generation and understanding. In particular, text-to-video diffusion models have significantly facilitated the customization of input videos with a target appearance, motion, and other attributes. Despite these advances, accurately distilling motion information from video frames remains challenging. Existing works use the residual between consecutive frames as the target motion vector; such vectors inherently lack global motion context and are vulnerable to frame-wise distortions. To address this, we present Spectral Motion Alignment (SMA), a novel framework that refines and aligns motion vectors using Fourier and wavelet transforms. SMA learns motion patterns through frequency-domain regularization, which facilitates learning of whole-frame global motion dynamics and mitigates spatial artifacts. Extensive experiments demonstrate SMA's efficacy in improving motion transfer while maintaining computational efficiency and compatibility across various video customization frameworks.
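The contrast between consecutive-frame residuals and a frequency-domain view of motion can be made concrete with a small sketch. It computes motion vectors as frame residuals, takes a 1D discrete Fourier transform along the time axis at every spatial position, and compares predicted and reference spectra. This is an illustration under stated assumptions, not SMA's implementation: frames are plain flattened vectors rather than diffusion latents, the Fourier transform is assumed to run along the temporal axis, the DFT is written out naively for self-containment, and the names `frame_residuals`, `temporal_dft`, `global_motion_loss` and the low-pass parameter `keep` are hypothetical.

```python
import cmath
from typing import List

Frame = List[float]  # one frame flattened to a vector of pixel (or latent) values


def frame_residuals(frames: List[Frame]) -> List[Frame]:
    """Motion vectors as consecutive-frame residuals: m_t = x_{t+1} - x_t."""
    return [[b - a for a, b in zip(f0, f1)] for f0, f1 in zip(frames, frames[1:])]


def temporal_dft(motion: List[Frame]) -> List[List[complex]]:
    """Naive 1D DFT along the time axis, independently at every spatial position.

    Entry [k][d] is temporal frequency k at position d; it sums over all T
    motion vectors, so every coefficient reflects the whole clip.
    """
    T, D = len(motion), len(motion[0])
    return [
        [
            sum(motion[t][d] * cmath.exp(-2j * cmath.pi * k * t / T) for t in range(T))
            for d in range(D)
        ]
        for k in range(T)
    ]


def global_motion_loss(pred_frames: List[Frame], ref_frames: List[Frame], keep: int = 2) -> float:
    """Mean squared distance between the `keep` lowest temporal frequencies
    (k = 0 .. keep-1) of predicted and reference motion (hypothetical low-pass)."""
    spec_pred = temporal_dft(frame_residuals(pred_frames))
    spec_ref = temporal_dft(frame_residuals(ref_frames))
    diffs = [
        abs(p - r) ** 2
        for k in range(min(keep, len(spec_pred)))
        for p, r in zip(spec_pred[k], spec_ref[k])
    ]
    return sum(diffs) / len(diffs)


# Usage: alternating frame-to-frame jitter has no zero-frequency content,
# while a steady drift does.
static = [[0.0]] * 5
jitter = [[0.0], [1.0], [0.0], [1.0], [0.0]]
drift = [[0.0], [1.0], [2.0], [3.0], [4.0]]
print(global_motion_loss(jitter, static, keep=1))  # 0.0: residuals [1, -1, 1, -1] sum to zero
print(global_motion_loss(drift, static, keep=1))   # 16.0: residuals [1, 1, 1, 1] sum to 4
```

One design point worth noting: if every frequency were kept with equal weight, Parseval's theorem would make the spectral distance exactly T times the time-domain distance between residuals, so the sketch only behaves differently from a per-frame residual loss because of the frequency selection. The low-pass cutoff here is an illustrative choice; the weighting SMA actually uses is not described on this page.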

1. Comparison within MotionDirector

| Input | MotionDirector with SMA | MotionDirector |
|---|---|---|
| Input Video of Motion M | Chicken + M | Chicken + M |
| Input Video of Motion M | Eagle + M + On Edge | Eagle + M + On Edge |
| Input Video of Motion M | Goldfish + M | Goldfish + M |
| (same input video as above) | Airplanes + M + In the sky | Airplanes + M + In the sky |
| Input Video of Motion M | Tank + M + In Desert | Tank + M + In Desert |

2. Comparison within VMC (on Show-1)

| Input | VMC with SMA | VMC |
|---|---|---|
| Input Video of Motion M | Tanks + M + In Desert | Tanks + M + In Desert |
| (same input video as above) | Turtles + M + On The Sand | Turtles + M + On The Sand |
| Input Video of Motion M | Raccoon + M + Nuts | Raccoon + M + Nuts |
| (same input video as above) | Squirrel + M + Cherries | Squirrel + M + Cherries |
| Input Video of Motion M | Bear + M | Bear + M |
| (same input video as above) | Astronaut + M + On Snow | Astronaut + M + On Snow |
| Input Video of Motion M | Spaceships + M + In Space | Spaceships + M + In Space |
| Input Video of Motion M | Butterfly + M + In Snow | Butterfly + M + In Snow |

3. Comparison within VMC (on Zeroscope)

| Input | VMC with SMA | VMC |
|---|---|---|
| Input Video of Motion M | Tank + M + In Snow | Tank + M + In Snow |
| (same input video as above) | Lamborghini + M + In Desert | Lamborghini + M + In Desert |
| Input Video of Motion M | Iron Man + M | Iron Man + M |
| (same input video as above) | Batman + M | Batman + M |
| Input Video of Motion M | Eagle + M + In Winter Forest | Eagle + M + In Winter Forest |

4. Comparison within Tune-A-Video

| Input | Tune-A-Video with SMA | Tune-A-Video |
|---|---|---|
| Input Video of Motion M | Monkey + M + On The Water | Monkey + M + On The Water |
| (same input video as above) | Lego Child + M + On Snow | Lego Child + M + On Snow |
| Input Video of Motion M | Eagle + M + Above Trees | Eagle + M + Above Trees |
| Input Video of Motion M | Lion + M + In A Bamboo Grove | Lion + M + In A Bamboo Grove |
| Input Video of Motion M | Flamingo + M | Flamingo + M |

5. Comparison within ControlVideo

| Input | Condition | ControlVideo with SMA | ControlVideo |
|---|---|---|---|
| Input Video of Motion M | Depth Condition | White Puppy + M + On The Lawn | White Puppy + M + On The Lawn |
| Input Video of Motion M | Depth Condition | Fennec Fox + M + Sausage, In Desert | Fennec Fox + M + Sausage, In Desert |
| Input Video of Motion M | Depth Condition | Fox + M | Fox + M |

Ablation of Global and Local Motion Alignment

| Input video (prompt) | Target prompt | Variants compared |
|---|---|---|
| A man is skating. | An astronaut is skating on the dune. | VMC (Show-1); VMC (Show-1) + $\ell_{\text{local}}$; VMC (Show-1) + $\ell_{\text{local}}$ + $\ell_{\text{global}}$ |
| A rabbit is eating strawberries. | A hamster is eating strawberries. | VMC (Show-1) + $\ell_{\text{local}}$; VMC (Show-1) + $\ell_{\text{local}}$ + $\ell_{\text{global}}$ |
| A car is driving on the road. | A schoolbus is driving on the grass. | VMC (Zeroscope) + $\ell_{\text{local}}$; VMC (Zeroscope) + $\ell_{\text{local}}$ + $\ell_{\text{global}}$ |
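The ablation separates a local term $\ell_{\text{local}}$ from a global term $\ell_{\text{global}}$. Assuming the local term is the wavelet part of SMA, applied spatially to each frame's motion vector, it can be sketched as follows: each consecutive-frame residual is decomposed with a single-level 2D Haar transform and the subbands of predicted and reference motion are compared. Everything concrete here is an assumption for illustration, not the paper's code: the Haar wavelet, the single decomposition level, the function names, and the hypothetical `detail_weight` parameter that reweights the detail subbands.

```python
from typing import List, Tuple

Image = List[List[float]]  # one H x W frame; H and W are assumed even


def haar2d(img: Image) -> Tuple[Image, Image, Image, Image]:
    """Single-level orthonormal 2D Haar DWT of an even-sized image.

    Returns (approx, row_detail, col_detail, diag_detail), each H/2 x W/2.
    """
    approx, row_d, col_d, diag = [], [], [], []
    for i in range(0, len(img), 2):
        ra, rr, rc, rd = [], [], [], []
        for j in range(0, len(img[0]), 2):
            a, b = img[i][j], img[i][j + 1]          # top-left, top-right
            c, d = img[i + 1][j], img[i + 1][j + 1]  # bottom-left, bottom-right
            ra.append((a + b + c + d) / 2)  # local average (low-pass)
            rr.append((a + b - c - d) / 2)  # top row minus bottom row
            rc.append((a - b + c - d) / 2)  # left column minus right column
            rd.append((a - b - c + d) / 2)  # diagonal difference
        approx.append(ra)
        row_d.append(rr)
        col_d.append(rc)
        diag.append(rd)
    return approx, row_d, col_d, diag


def residuals(frames: List[Image]) -> List[Image]:
    """Per-frame motion vectors m_t = x_{t+1} - x_t."""
    return [
        [[q - p for p, q in zip(r0, r1)] for r0, r1 in zip(f0, f1)]
        for f0, f1 in zip(frames, frames[1:])
    ]


def local_motion_loss(pred_frames: List[Image], ref_frames: List[Image],
                      detail_weight: float = 1.0) -> float:
    """Weighted mean squared distance between Haar subbands of each motion frame."""
    total, count = 0.0, 0
    for mp, mr in zip(residuals(pred_frames), residuals(ref_frames)):
        for band_idx, (bp, br) in enumerate(zip(haar2d(mp), haar2d(mr))):
            w = 1.0 if band_idx == 0 else detail_weight  # band 0 is the approximation
            for row_p, row_r in zip(bp, br):
                for p, r in zip(row_p, row_r):
                    total += w * (p - r) ** 2
                    count += 1
    return total / count


# Usage: a reference clip that stays still vs. a prediction that changes.
zero = [[0.0, 0.0], [0.0, 0.0]]
moved = [[1.0, 2.0], [3.0, 4.0]]
print(local_motion_loss([zero, moved], [zero, zero]))                     # 7.5
print(local_motion_loss([zero, moved], [zero, zero], detail_weight=0.0))  # 6.25
```

Because the orthonormal Haar transform preserves energy, `detail_weight=1.0` makes this loss identical to a plain per-pixel MSE on the residuals (7.5 is the mean of 1, 4, 9 and 16); it is the subband weighting that lets a wavelet term treat coarse structure and fine spatial detail differently. A full objective would add such a term and a global term to the base framework's loss with their own weights, which this page does not specify.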

References

• Zhao, Rui, et al. "MotionDirector: Motion Customization of Text-to-Video Diffusion Models." arXiv preprint arXiv:2310.08465 (2023).

• Jeong, Hyeonho, et al. "VMC: Video Motion Customization using Temporal Attention Adaption for Text-to-Video Diffusion Models." arXiv preprint arXiv:2312.00845 (2023).

• Wu, Jay Zhangjie, et al. "Tune-A-Video: One-Shot Tuning of Image Diffusion Models for Text-to-Video Generation." Proceedings of the IEEE/CVF International Conference on Computer Vision (2023).

• Zhao, Min, et al. "ControlVideo: Adding Conditional Control for One Shot Text-to-Video Editing." arXiv preprint arXiv:2305.17098 (2023).

• Sterling, Spencer. "Zeroscope." https://huggingface.co/cerspense/zeroscope_v2_576w (2023).

• Zhang, David Junhao, et al. "Show-1: Marrying Pixel and Latent Diffusion Models for Text-to-Video Generation." arXiv preprint arXiv:2309.15818 (2023).